Configuration

A bit of context about Search Indexes

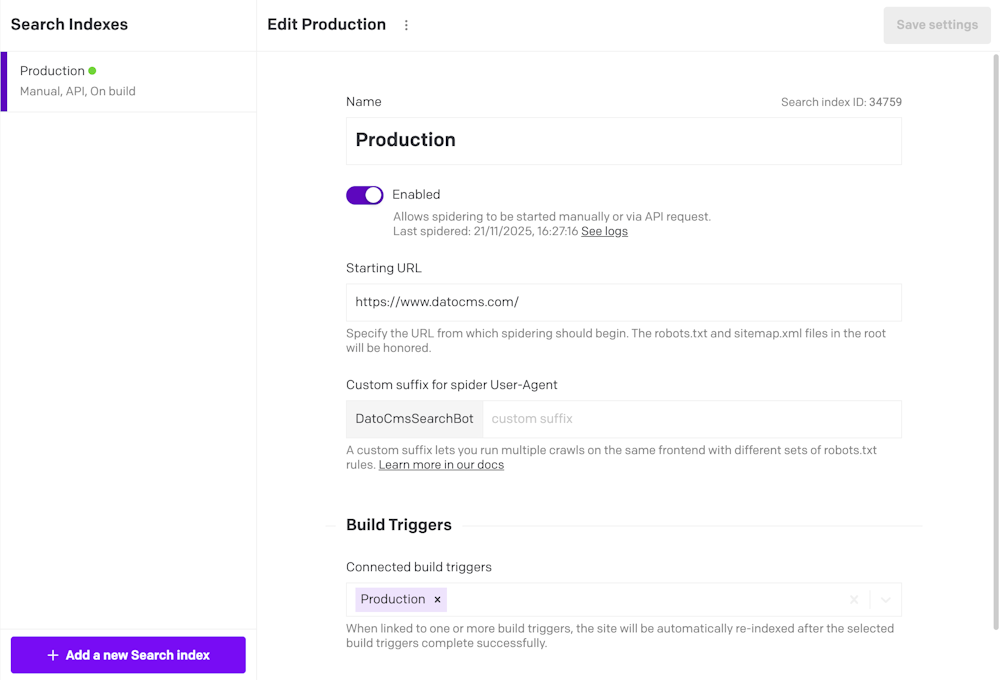

Site Search is configured through search indexes. A search index tells DatoCMS which website to index: by specifying a starting URL and a few other intuitive parameters, you can quickly index your website and offer your visitors a tailored search experience.
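Conceptually, spidering is a breadth-first traversal that begins at the starting URL and follows links that stay on the same site. The TypeScript sketch below illustrates only that idea. It runs on a hypothetical in-memory map of pages to links (`LinkGraph`) so it needs no network access. It is not DatoCMS's actual crawler, which also relies on your robots.txt file and sitemaps.

```typescript
// Hypothetical in-memory "website": each page URL maps to the hrefs found on it.
type LinkGraph = Record<string, string[]>;

// Breadth-first spidering from a starting URL, staying on the starting origin.
// Conceptual sketch only: it does not fetch pages or parse HTML.
function spider(startUrl: string, graph: LinkGraph, maxPages = 1000): string[] {
  const start = new URL(startUrl);
  start.hash = "";
  const origin = start.origin;
  const visited = new Set<string>();
  const queue: string[] = [start.href];
  const order: string[] = [];

  while (queue.length > 0 && order.length < maxPages) {
    const current = queue.shift()!;
    if (visited.has(current)) continue; // a page may be queued more than once
    visited.add(current);
    order.push(current);

    for (const href of graph[current] ?? []) {
      // Resolve relative links against the current page and drop #fragments.
      const next = new URL(href, current);
      next.hash = "";
      // Only follow links that stay on the starting URL's origin.
      if (next.origin === origin && !visited.has(next.href)) {
        queue.push(next.href);
      }
    }
  }
  return order;
}

const exampleSite: LinkGraph = {
  "https://example.com/": ["/docs", "/blog", "https://other.org/"],
  "https://example.com/docs": ["/docs/setup#intro", "/"],
  "https://example.com/docs/setup": [],
  "https://example.com/blog": ["/docs"],
};

// Visits /, /docs, /blog, /docs/setup; the external link is skipped.
console.log(spider("https://example.com/", exampleSite));
```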

Since the content of a DatoCMS project can be consumed by multiple frontends, you can create multiple search indexes in a single project, for example one for each frontend.

Once a search index is configured, you can:

Trigger the spidering of a website directly from the DatoCMS interface;

Link one or more search indexes to any build trigger, so that each time the frontend is rebuilt, DatoCMS crawls the site and re-indexes its pages.

Configuring a search index is deliberately simple: you specify a starting URL, and you're usually good to go. You can also specify a custom user-agent suffix for the crawler; by configuring your robots.txt file and sitemaps accordingly, you can even generate completely independent search indexes for different parts of your website.
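To make the robots.txt idea concrete, here is a minimal TypeScript sketch of how a crawler could decide whether a path is allowed for its user agent. It assumes, purely for illustration, that each index's crawler identifies itself with a distinct product token such as the hypothetical `SearchBot-docs` or `SearchBot-blog`; the actual user-agent string DatoCMS sends, and how your suffix is appended to it, may differ. The matching follows RFC 9309 (use the groups naming the crawler, else the `*` groups; the longest matching rule wins; Allow wins ties), but wildcards (`*`, `$`) are not supported.

```typescript
// One Allow/Disallow line inside a group.
interface Rule {
  allow: boolean;
  path: string;
}

// A group: one or more User-agent lines followed by their rules.
interface Group {
  agents: string[]; // lowercased product tokens
  rules: Rule[];
}

function parseRobots(robotsTxt: string): Group[] {
  const groups: Group[] = [];
  let current: Group | null = null;
  let lastWasAgent = false;

  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    const line = rawLine.replace(/#.*$/, "").trim(); // strip comments
    const sep = line.indexOf(":");
    if (sep === -1) continue;
    const key = line.slice(0, sep).trim().toLowerCase();
    const value = line.slice(sep + 1).trim();

    if (key === "user-agent") {
      // Consecutive User-agent lines share a group; one after rules starts a new group.
      if (current === null || !lastWasAgent) {
        current = { agents: [], rules: [] };
        groups.push(current);
      }
      current.agents.push(value.toLowerCase());
      lastWasAgent = true;
    } else if ((key === "allow" || key === "disallow") && current !== null) {
      // An empty Disallow means "nothing is disallowed", so it adds no rule.
      if (value !== "") current.rules.push({ allow: key === "allow", path: value });
      lastWasAgent = false;
    }
  }
  return groups;
}

function isAllowed(robotsTxt: string, productToken: string, path: string): boolean {
  const groups = parseRobots(robotsTxt);
  const token = productToken.toLowerCase();
  // Use the groups naming this crawler; fall back to the "*" groups otherwise.
  let matching = groups.filter((g) => g.agents.includes(token));
  if (matching.length === 0) matching = groups.filter((g) => g.agents.includes("*"));

  // The longest matching path prefix wins; on a tie, Allow wins.
  let best: Rule | null = null;
  for (const group of matching) {
    for (const rule of group.rules) {
      if (!path.startsWith(rule.path)) continue;
      if (
        best === null ||
        rule.path.length > best.path.length ||
        (rule.path.length === best.path.length && rule.allow)
      ) {
        best = rule;
      }
    }
  }
  return best === null ? true : best.allow; // no matching rule: allowed
}

// Two hypothetical crawler tokens, each restricted to one part of the site.
const robots = `
User-agent: SearchBot-docs
Disallow: /
Allow: /docs

User-agent: SearchBot-blog
Disallow: /
Allow: /blog
`;

console.log(isAllowed(robots, "SearchBot-docs", "/docs/setup")); // true
console.log(isAllowed(robots, "SearchBot-docs", "/blog/hello")); // false
```

With rules like these, an index crawled as `SearchBot-docs` only ever sees `/docs` pages, while one crawled as `SearchBot-blog` only sees `/blog` pages.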

Creating a Search Index

Go to the Project Settings > Search indexes section of your project;

Click the Add a new Search index button;

Give the index a name and specify your Starting URL: that's the address from which crawling will begin;

Press the Save settings button.
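Before pressing Save settings, it can help to sanity-check the values you are about to enter. A minimal sketch, assuming a hypothetical `SearchIndexDraft` shape and assuming the starting URL should be an absolute http(s) address; DatoCMS performs its own validation when you save, and its rules may differ.

```typescript
// Hypothetical shape of the values typed into the new search index form.
interface SearchIndexDraft {
  name: string;
  startingUrl: string;
}

// Returns a list of problems; an empty list means the draft looks usable.
function validateDraft(draft: SearchIndexDraft): string[] {
  const errors: string[] = [];
  if (draft.name.trim() === "") errors.push("name is required");
  try {
    // new URL() throws on relative or malformed input such as "docs.example.com".
    const url = new URL(draft.startingUrl);
    if (url.protocol !== "https:" && url.protocol !== "http:") {
      errors.push("starting URL must use http or https");
    }
  } catch {
    errors.push("starting URL must be an absolute URL");
  }
  return errors;
}

console.log(validateDraft({ name: "Docs", startingUrl: "https://docs.example.com/" })); // []
```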

You can also link the search index to one or more build triggers: in that case, crawling starts every time a deployment completes successfully.
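The relationship between build triggers and search indexes boils down to a filter: when a deployment finishes, only the indexes linked to that build trigger are recrawled, and only if the deployment succeeded. The types below (`SearchIndexConfig`, `DeployEvent`) and the trigger IDs are hypothetical and do not mirror DatoCMS's API objects.

```typescript
// Hypothetical model of a search index and the build triggers linked to it.
interface SearchIndexConfig {
  name: string;
  startingUrl: string;
  buildTriggerIds: string[];
}

// Hypothetical outcome of a deployment started by a build trigger.
interface DeployEvent {
  buildTriggerId: string;
  succeeded: boolean;
}

// Returns the search indexes whose crawling should start after this deployment.
function indexesToRespider(indexes: SearchIndexConfig[], event: DeployEvent): SearchIndexConfig[] {
  // Crawling only starts once the deployment has completed successfully.
  if (!event.succeeded) return [];
  return indexes.filter((index) => index.buildTriggerIds.includes(event.buildTriggerId));
}

const indexes: SearchIndexConfig[] = [
  { name: "Marketing site", startingUrl: "https://www.example.com/", buildTriggerIds: ["prod"] },
  { name: "Docs", startingUrl: "https://docs.example.com/", buildTriggerIds: ["prod", "docs"] },
];

// A successful "docs" deployment recrawls only the Docs index.
console.log(indexesToRespider(indexes, { buildTriggerId: "docs", succeeded: true }).map((i) => i.name));
```

Because one index can list several triggers, a single shared trigger (here `prod`) can refresh every index at once.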

At any time, you can also trigger a new spidering of your website, either directly from the DatoCMS interface or through a dedicated Content Management API (CMA) endpoint.
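If you prefer scripting the respidering, the call is an ordinary authenticated HTTP request to the CMA. The sketch below only builds the request. It uses the CMA base URL and the usual CMA headers (`Authorization: Bearer …`, `Accept`, `X-Api-Version: 3`), which you should double-check against the CMA reference, and it deliberately leaves the endpoint path as a parameter: take the exact respidering endpoint from the CMA documentation. The official `@datocms/cma-client-node` and `@datocms/cma-client-browser` packages wrap these calls if you'd rather not build requests by hand.

```typescript
const CMA_BASE_URL = "https://site-api.datocms.com";

interface CmaRequest {
  url: string;
  method: "POST";
  headers: Record<string, string>;
}

// Builds (but does not send) a POST request to a CMA endpoint.
// endpointPath is a placeholder: use the respidering endpoint from the CMA reference.
function buildRespiderRequest(apiToken: string, endpointPath: string): CmaRequest {
  const url = new URL(endpointPath, CMA_BASE_URL);
  // Refuse absolute URLs pointing elsewhere, so the token is never sent to another host.
  if (url.origin !== CMA_BASE_URL) throw new Error("endpoint must be on the CMA host");
  return {
    url: url.href,
    method: "POST",
    headers: {
      Authorization: `Bearer ${apiToken}`,
      Accept: "application/json",
      "X-Api-Version": "3",
    },
  };
}

// Sending it is then a plain fetch call (Node 18+ or a browser), e.g.:
// const req = buildRespiderRequest(token, "/<respidering-endpoint>");
// await fetch(req.url, { method: req.method, headers: req.headers });
```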

Inspecting crawling results

When the crawling of your website ends, a Site spidering completed with success event appears in the log under Project Settings > Search index activity.

Click its Show details link to see the complete list of spidered pages.
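If your site publishes a sitemap, comparing it with the list of spidered pages is a quick way to spot pages the crawler never reached. A minimal sketch, assuming you have both lists as arrays of absolute URLs (how you export them is up to you) and assuming a trailing slash is not meaningful on your site:

```typescript
// Normalizes a URL for comparison: drops the #fragment and a trailing slash
// (except for the root path). Treating "/docs" and "/docs/" as equal is an assumption.
function normalize(u: string): string {
  const url = new URL(u);
  url.hash = "";
  if (url.pathname.length > 1 && url.pathname.endsWith("/")) {
    url.pathname = url.pathname.slice(0, -1);
  }
  return url.href;
}

// Returns the sitemap URLs that do not appear among the spidered pages.
function missingFromCrawl(sitemapUrls: string[], spideredUrls: string[]): string[] {
  const crawled = new Set(spideredUrls.map(normalize));
  return sitemapUrls.filter((u) => !crawled.has(normalize(u)));
}

const sitemap = ["https://example.com/", "https://example.com/docs/", "https://example.com/pricing"];
const spidered = ["https://example.com/", "https://example.com/docs"];
console.log(missingFromCrawl(sitemap, spidered)); // [ 'https://example.com/pricing' ]
```

A page that shows up here is often excluded by robots.txt, unreachable through links, or outside the starting URL's site.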