Posted on March 7th, 2019 by Massimiliano Palloni

Relationships shape us, make us collectively stronger and more able to achieve goals that we thought impossible. Curiously enough, the same applies to software. The relationship between DatoCMS and one of our repeat customers and frequent collaborators, Jeff Escalante, has allowed our CMS to grow, improve and respond to the needs of a fast-growing company like HashiCorp.

Jeff has been a staunch believer in static development for years, and he's made it a signature approach in his work in both of his last two roles.

We've been fortunate to partner with Jeff for quite a while, first at Carrot and now with the team at HashiCorp, so we asked him to sit down with us for an informal chat. Read below to find out what spell-slinging secrets we have managed to extract from him along the way.

Let's pretend we don't know you, Jeff. What is HashiCorp and what do you work on?

Why did HashiCorp choose DatoCMS?

Well, it's a long story, actually! My previous job was at an agency called Carrot (now Virtue), where we specialized in advanced static builds. We also had plenty of experience in managing multiple websites under single larger brands.

As an agency, every job was different from the last, and we needed tools that could be recycled for different projects, to keep our staff productive and foundations consistent. In choosing them, we had to find a careful balance between quality and flexibility. We needed our tools to be trustworthy but also able to cope with a wide variety of situations without falling apart.

Choosing the right CMS for an agency does seem like a tricky proposition.

Yes, it does. Some CMSs are very good, but their user experience is fairly confusing, especially as you get to more complex scenarios with a lot of linked relational records. Finding the right pricing scheme is also problematic: some solutions are geared exclusively towards enterprises, so it's almost impossible to use a CMS for a smaller organization or product without taking very high risks. And others are aimed more at very small companies, so enterprises are reluctant to trust them. Having to navigate this divide was something that often put us in a really uncomfortable position with our clients.

Which features were the most important for your agency?

Agency clients are often demanding, and if something can be asked for, it will be. So we needed flexibility, sure, but we also needed really good control over validation. A lot of CMSs are not built to be put in the hands of non-technical clients for extended periods of time, so you'll watch a site crumble as a result of clients slowly breaking all the rules, like uploading a 10 MB image or pasting three paragraphs of text in a headline. We took great pride in building high quality software that would last, so we couldn't let this happen.

Then you adopted DatoCMS…

Yes, we ended up finding a really good balance in Dato. It has a lot of flexibility, a good pricing range that accommodates both large and small projects, a great user experience even with deeply linked records, and a very thorough API.

From that point on, most of the projects that came in at Carrot were built with DatoCMS. At one point, we pitched to HashiCorp to become a client — they wanted a static site and a headless CMS to manage multiple different properties, sharing assets, design and code through a wide range of products, which is right in the zone of projects we loved. We did end up partnering with them and after an exhaustive research and evaluation process, moved forward with Dato, which was exciting for us both.

A few months later, some structural changes within the company resulted in Carrot being absorbed into Virtue and the development department scaling down. Around this time, HashiCorp came in and offered me a position on their digital team, which I happily accepted as we had a great relationship and I had been doing exciting work on their web properties.

Did you end up bringing DatoCMS with you?

I sure did! During my time at Carrot, we rebuilt hashicorp.com from scratch, using Dato as the CMS. When I switched from working for HashiCorp through Carrot to working for them directly, the team and I continued to invest deeper into improving the site and its integration with Dato.

How was the transition to DatoCMS for HashiCorp?

DatoCMS's API is something that attracted us from the very beginning, because it is one of the most extensive among the many headless CMSs I have evaluated.

The fact that it was so thorough made us a lot more confident in making the switch: I knew that anything that existed within the old CMS could be migrated over by writing a script.

So while the migration was tough and took us a while, as any major system transition will, it was mostly because of issues we had with our previous CMS — everything about the integration with DatoCMS was quite smooth.

What is your current static site generator of choice?

We are currently using Middleman, a really great piece of software in general and an excellent static generator. But as the company continues to grow, we have added more and more pages that we're generating and more shared React components that comprise the majority of our sites' markup. Working with an extensive React-based component system on top of a Ruby-based static site generator is quite difficult. It has required us to implement several hacks to work around the transition between Ruby and JavaScript, and the build time is very slow because of this. So at the moment, we are in the middle of a pretty extensive refactor and we need to move to a JavaScript-based static site generator in order to get a closer integration for these components.

At the moment, we are planning on building a more opinionated configuration layer on top of Next.js, and taking it from there, and it has been a really big improvement in our proof of concept testing so far.

Why Next.js?

There are a few big reasons. First, since we have this global shared component system built with React, it makes sense for us to use an architecture that is based on JavaScript and integrates well with React. We have a lot of sites with a lot of pages, so in order to keep performance strong, we prefer a server-rendered architecture with code splitting, which is exactly what Next gives us.

On top of that, you can generate a static site or run your own Node server with exactly the same codebase. This feature is killer: it gives us the flexibility to start simple and not worry about massive refactors or technology changes as projects begin and mature, while also allowing us to maintain different services using the same foundation.

For example, our docs pages are likely to stay static for a while, but other sites we work on like our learning and community portals will have user logins from the get-go and be better fit as dynamic sites. With Next, we can use the same architecture for both of these types of sites, which makes our developers much more productive.

Being able to flip a switch and go from a static to a dynamic site without changing the architecture or the platform is really big.

Yeah, that's really the defining feature for us with Next.js.

Are there any other interesting things you're doing with the new architecture?

Yeah actually, we just finished the prototypes of our data loading infrastructure, and we're really taking advantage of DatoCMS's GraphQL API for this. Previously, we would pull all of the data from DatoCMS then build the site using that, but as we added more and more relational content, this process got slower, to the point where just requesting a full copy of all our data would take a couple minutes. On top of that, we have a lot of sites that don't use all the data we have in the CMS – they might just pull the data to render our shared footer, which appears on all sites. It was a big waste of time.

GraphQL really takes care of that concern for us. It lets us very quickly go to DatoCMS and pull just what we need for the site being built, while still taking advantage of Dato's first-class relational records. We rely on those heavily to hold on to links between companies, products, talks, videos, blog posts, and everything else, which makes filtering and finding content easy for users and surfacing related content easy for us.
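To illustrate the "pull just what we need" idea, here is a minimal sketch of a request that asks only for shared footer data. The endpoint and the `Authorization: Bearer` header follow DatoCMS's public Content Delivery API documentation as we understand it; the `footer`, `links`, `label`, and `url` names are hypothetical and are not HashiCorp's actual schema.

```typescript
// Sketch only: the model and field names below are hypothetical.
const FOOTER_QUERY = `
  query SharedFooter {
    footer {
      links {
        label
        url
      }
    }
  }
`;

interface GraphQLRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Build the HTTP request for one small query instead of downloading every record.
function buildRequest(query: string, apiToken: string): GraphQLRequest {
  return {
    url: "https://graphql.datocms.com/",
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Accept: "application/json",
        Authorization: `Bearer ${apiToken}`,
      },
      body: JSON.stringify({ query }),
    },
  };
}

// Usage, in a runtime that provides a global fetch:
//   const { url, init } = buildRequest(FOOTER_QUERY, "YOUR-API-TOKEN");
//   const { data } = await (await fetch(url, init)).json();
```

A site that only renders the footer would send this one query, so its build never has to wait for the rest of the content to download.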

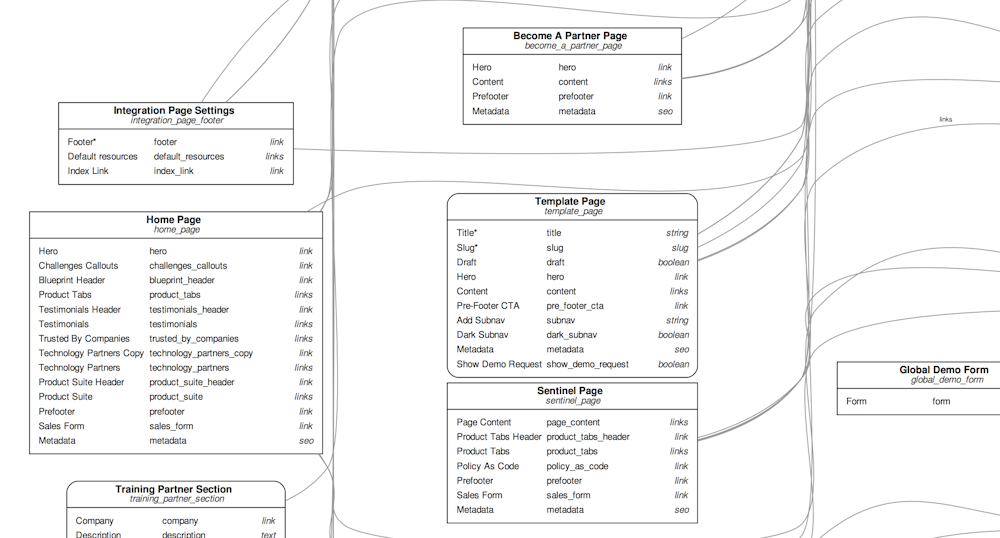

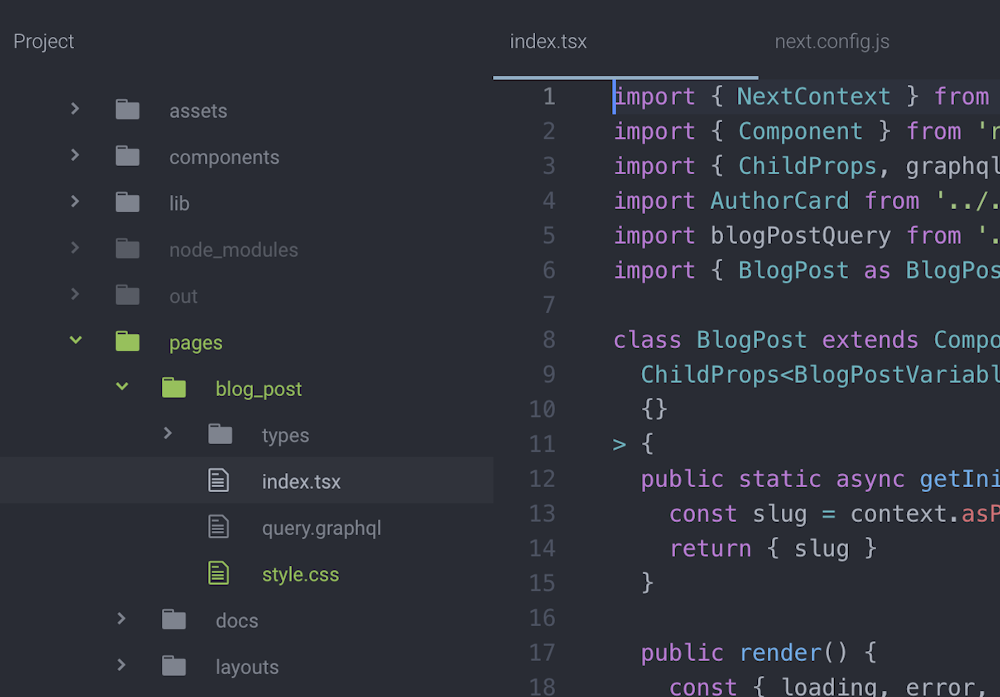

Right now, each page sits in a folder with a couple of files: a JS React component for the markup, a CSS file for the styles, a GraphQL file that contains the query for the data the page needs, and typings for TypeScript. This structure is wonderful: concerns are nicely separated but still easily found in the same place. When the page renders, we go to DatoCMS and get the data needed to render it.

The TypeScript thing is also really great and takes advantage of how good a job DatoCMS has done with the GraphQL API. We have a script set up to call Apollo Codegen, which looks at our components and Dato's GraphQL schema, then automatically generates typings for our components and the data they use. So we get the benefits of strict type checking without ever needing to write out any types, thanks to these brilliant tools.
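As a rough illustration of what generated typings buy you, the sketch below shows the kind of interfaces a code generator might emit for a page query, plus a function that consumes them. The query name `BlogPostQuery` and all of its fields are hypothetical; real output depends on the schema and on the generator's settings.

```typescript
// Hypothetical generated typings: in practice a tool would derive these from
// the page's .graphql file and the CMS schema, so nobody writes them by hand.
interface BlogPostQuery_post_author {
  name: string;
}

interface BlogPostQuery_post {
  title: string;
  publishedAt: string | null;
  author: BlogPostQuery_post_author | null;
}

interface BlogPostQuery {
  post: BlogPostQuery_post | null;
}

// Page code consumes the typed data. The compiler forces every nullable
// field to be handled before it is used.
function bylineFor(data: BlogPostQuery): string {
  if (data.post === null) {
    return "Post not found";
  }
  const author = data.post.author ? data.post.author.name : "Unknown author";
  return `${data.post.title} by ${author}`;
}
```

If a field were renamed in the CMS, regenerating the typings would turn every stale usage into a compile error instead of a broken page.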

There are a lot of people working on the site every day. How are you managing the risk of something going wrong?

We are running HashiCorp's sites through Netlify and it provides a nice layer of security, notifying us if a deploy fails for any reason. If somebody pushes content that our code can't handle or we didn't set up enough validations to catch, the build will fail, no error will go live, and we can fix the issue, add the necessary validations and patch it.

Dato's integration with Netlify is great. It's a little bit easier than with other CMSs, where you need to manually wire up the webhook, since you just click a button and it connects directly to Netlify.

And on top of this, of course we have a large suite of unit and integration tests, and we also use BugSnag to try to stay on top of any exceptions that occur in production.

Speaking of webhooks, how are you using them?

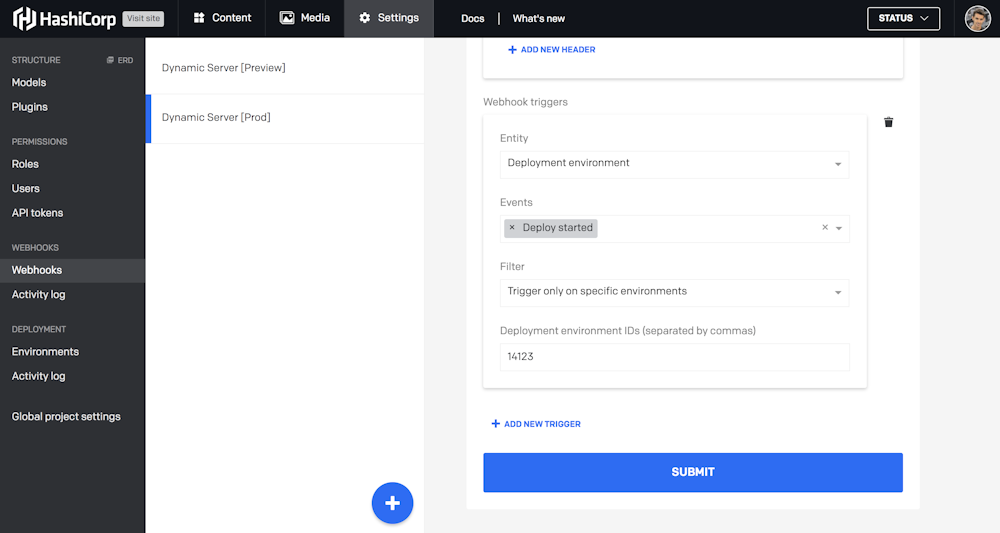

As I said before, the site is growing bigger every day and the compile time is starting to slow down. To cut it down we came up with an interim solution: we have a dynamic server with a good caching system that renders the content-heavy portions of the site, which for us are the blog and the resources page.

Within Dato, we use webhooks to hit that server when there's a deployment so that it can refresh its cache and download the latest from Dato.
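The webhook-driven refresh described above could look roughly like the sketch below. It is not HashiCorp's server: `ContentCache`, `onDeployWebhook`, and the injected `fetchLatest` function are hypothetical names, and the HTTP layer is left out so the idea stays visible.

```typescript
type Content = Record<string, unknown>;
type FetchLatest = () => Promise<Content>;

// In-memory cache that serves the last content snapshot it managed to load.
class ContentCache {
  private snapshot: Content = {};
  private readonly fetchLatest: FetchLatest;

  constructor(fetchLatest: FetchLatest) {
    this.fetchLatest = fetchLatest;
  }

  // Replace the snapshot only after the new data has fully arrived.
  async refresh(): Promise<void> {
    this.snapshot = await this.fetchLatest();
  }

  get(key: string): unknown {
    return this.snapshot[key];
  }
}

// Called by the route that receives the CMS webhook.
// Returns the HTTP status code the route should respond with.
async function onDeployWebhook(cache: ContentCache): Promise<number> {
  try {
    await cache.refresh();
    return 200;
  } catch {
    // Keep serving the previous snapshot if the CMS cannot be reached.
    return 502;
  }
}
```

Because the snapshot is swapped only on success, a failed refresh leaves visitors with slightly stale content rather than an error page.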

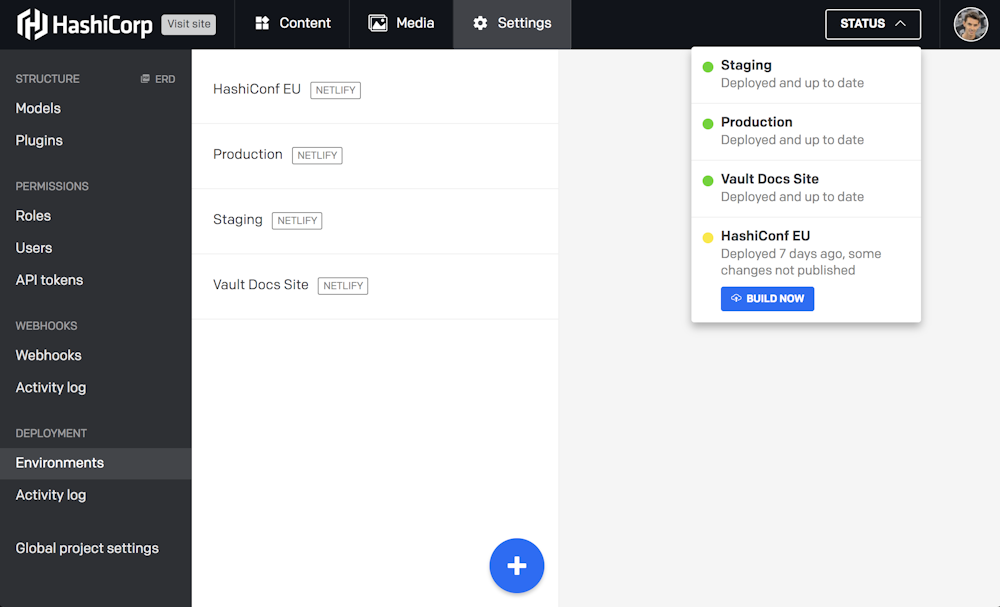

What do you think of our deployment environments?

HashiCorp is moving towards having all of their sites and content consume data from a single DatoCMS instance to promote cross-linking of data and reduce user confusion switching contexts. When that happens we'll be deploying ten or more sites off the same instance of Dato, setting each of our specific product sites as different environments. We already have ~4 deploying from our master instance, and we are constantly working on upgrading more of the company's sites to take advantage of this.

Anyone with access can go to the build menu and create a deploy to the target project site so it will just deploy that site and that site only. Because of this we get quicker deployments, and a reduced risk of something being accidentally deployed to the wrong place. The environment thing is really big for us — we were all super excited when it came out, and immediately implemented it for several sites.

What are you working on right now?

A ton! In addition to our Next.js-based architecture refactor, we've been trying to come up with a system in which we can use a standard set of models to allow non-technical people to create pages from scratch ("template pages", as we named them). We are calling this the "section block system", and will definitely do a more detailed writeup as we refine it more.

This type of system is the "holy grail" of CMS-based websites — a system in which non-developers can create new pages that look great, perform well, and satisfy stakeholder needs. It's something that many have attempted, but is incredibly difficult — there's a fine line between too much flexibility, which can lead to poorly designed/engineered or off-brand pages being pushed through without approval, and too strict of user controls, which makes the system kind of useless — if it's not flexible enough to get a wide range of messages across, people will come to the dev team to ask for custom pages instead of using the system.

The problem ultimately lies with trusting your CMS users, right?

That's right, and unfortunately you can't really trust your users to figure things out — you need to set intelligent constraints to keep them from doing anything wrong. Anyone who has worked with CMSs for a while knows that Murphy's law applies here to the fullest: if there's something a user can do in a CMS, they will do it. We have seen every range of attempted hack from putting 1000 words into a headline to changing a jpg file extension to ".svg" just to satisfy an image requirement. So validation is a big part of it.

We're also trying to figure out how to create a system to balance layout orientations of the sections of a page, then within each layout zone have standard components that react well to things around them, and making it so that the only options you can choose are carefully built and tested, on-brand components, but that the components are flexible enough to allow a variety of different types of layouts and presentations.

You should build a DatoCMS plugin in the meantime, you know, for science…

Actually, we're really excited about DatoCMS plugins too! This is a feature that is going to help us out a lot, because we won't have to adapt our system to the way the DatoCMS interface works no matter what. With plugins, we can just write our own micro-interface that works best for our specific system and basically embed it within Dato, which is awesome! It's like having a custom built CMS, except DatoCMS takes care of all the hard parts.

We are also thinking about pulling in our React component library and doing live content renders with those components… I mean, the sky is the limit with that kind of stuff!

We know you're an efficiency monster: what are you doing to cut response times?

We're thinking about a way to do partial cache updates. In order to do this, we would have to get back from DatoCMS both what we have updated in the most recent deploy and a full map of everything that has a relation to every item, so that we could validate accurately. Kind of like a dependency tree, except for data that has been changed in the CMS. With this, we could make really fast and efficient cache refreshes with Dato.
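To make the dependency-tree idea concrete, here is a minimal sketch that assumes the CMS could hand back two things: the ids changed in the latest deploy, and a map from each record to the records that link to it. Both inputs and all names (`ReverseLinks`, `affectedRecords`, the sample ids) are hypothetical; the interview describes this as a wish, not an existing API.

```typescript
// Maps a record id to the ids of the records that reference it.
type ReverseLinks = Map<string, string[]>;

// Walk the reverse links breadth-first to collect every record whose cached
// rendering could be stale after the given records changed.
function affectedRecords(changed: string[], referencedBy: ReverseLinks): Set<string> {
  const affected = new Set<string>(changed);
  const queue = [...changed];
  while (queue.length > 0) {
    const id = queue.shift() as string;
    for (const parent of referencedBy.get(id) ?? []) {
      if (!affected.has(parent)) {
        affected.add(parent); // the visited check also guards against cycles
        queue.push(parent);
      }
    }
  }
  return affected;
}

// Example data: an author is linked from two posts, one of which is
// featured on the home page.
const links: ReverseLinks = new Map([
  ["author-1", ["post-1", "post-2"]],
  ["post-1", ["home"]],
]);
const stale = affectedRecords(["author-1"], links);
```

Only the entries in `stale` would need to be refreshed; everything else in the cache can stay as it is.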

Is it…?

Yes, an additional feature request in the middle of the interview! [laughs]

Well, your ideas have always helped us improve DatoCMS. Some of the features we released, like Scheduled publications and Draft/Published records, were requests we received from you.

When we were doing our initial evaluation for HashiCorp between DatoCMS and other leading headless CMS providers, the importance of a good work relationship was something that came up as a major point, because I had worked with some other CMSs for years and they just didn't listen to our feedback.

When you work with a company this closely, working on the CMS that powers your website, you need a partner that you have really good communication and a great relationship with, to make sure that you're getting stuff done.

We are beyond excited about the close relationship we have built between DatoCMS and HashiCorp. It's really amazing. More than once, we have asked "Hey, can we have this thing?" and the next day we get back a response, "Oh yeah, here it is". Unbelievable.

It's astounding that you're able to listen to everybody's feedback, not just ours, and act on it while still being such a small, bootstrapped company. Especially when there are giant competitors with hundreds of employees and millions of dollars in the bank that just can't put an ear to the ground and hear what their community is asking.

Aww, thank you!

If you want to know more about Jeff and his work, drop him a line on Twitter. He's always a friendly human being. And a competent, knowledgeable developer, so that's a plus.

In case you want to know more about DatoCMS, try our demo. It's free and super cool, really.

Check out other posts we've written on our Blog